S4 project 2018: A reinforcement learning approach based on imitation using Kinect and Pepper

Summary

This project is intended as a first step towards a more general learn-by-imitation approach for autistic children. It was developed in collaboration with CHU Brest, with Dr. Nathalie Collot as the main contact.

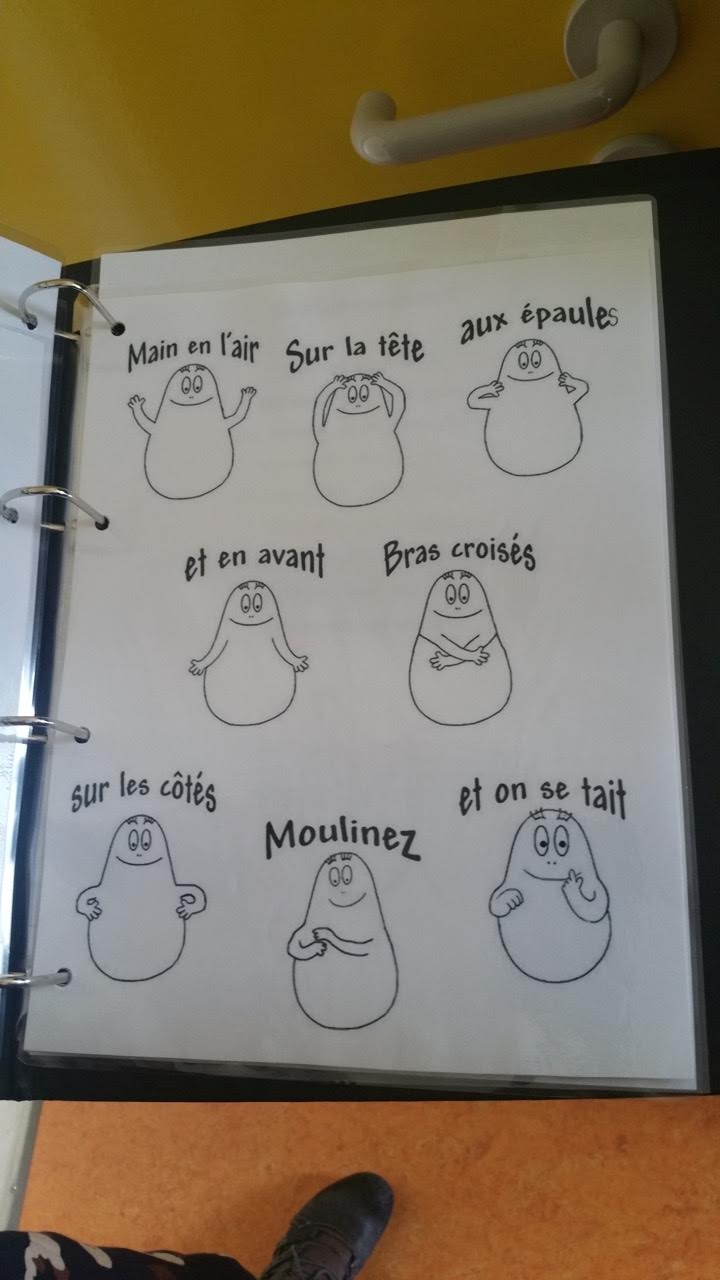

The main goal was to recreate a well-known nursery rhyme activity that Dr. Collot's team uses to interact with patients. The song is divided into several sections; in each one, the entertainer adopts a predefined posture that the children should try to imitate.

The gestures currently used are shown in the image below:

The project therefore rests on two main pillars:

- Body pose detection: a Windows Kinect V1 was chosen as the sensor for this task.

- Robot control and synchronization.

Body pose detection relies entirely on publicly available software. The main focus of our work was therefore to get familiar with the robot itself, synchronize all the tasks, and fine-tune the whole system with the nature of the project and its target subjects in mind.

The project is built on ROS (only the Kinetic distribution has been tested) and is written mainly in C++, with some parts in Python.

Installation and Set Up

The full code is available here. The repository should be cloned directly into the src folder of a catkin workspace. It contains two ROS packages at its root:

- openni_tracker: skeleton tracker for use with the Kinect. It is included in the repository because no Kinetic release is available in the official repositories.

- pepper_imitation: the system developed by the S4 team.

The dependencies (and ROS itself, if it is not already installed) can be installed by running pepper_imitation/installDependencies.sh.

Running the game

The game sequence is started by running the pepper_imitation_game.launch file with roslaunch. The skeleton tracker and the Kinect driver must be run separately, using the pepper_imitation_kinect.launch file.

Body pose checks can be disabled in pepper_imitation_node.launch by setting the “skip_pose_checks” parameter to true. In that case, the game treats every check as successful.

Architecture

The system is made up of the following nodes:

- pepper_audio_player_node: offers an interface for loading, starting and stopping an audio file stored on Pepper's internal computer. It also reports the current playback time.

- pepper_face_tracker_node: enables or disables Pepper's built-in random face tracking.

- pepper_tts_node: interface to Pepper's TTS engine. It also supports emotional speech (e.g. style=joyful).

- pepper_tablet_node: pops up an input box in which the user types their name before the game starts.

- pepper_imitation_node: commands the different gestures and checks whether the detected person's pose, if any, is similar.

- pepper_teleop_joy_node: controls the robot's movement and rotation with a joystick. Note that the default joystick values defined in the node are set up to match a wired Xbox controller.

Each of these nodes can be run on its own and commanded by publishing on its respective topics, which allows Wizard-of-Oz-style control. The game itself, including synchronization, is implemented as a state machine defined in the “pepper_imitation_game_node.py” file, using the SMACH library.

Game Flow

The expected behaviour is as follows:

- Pepper says hi, welcomes the users to IMT Atlantique, and invites them to click on the tablet to enter a name.

- When the user clicks, an input dialog will appear after a few seconds.

- Once the user has entered their name, the music starts playing and the game enters its main loop.

- Pepper prompts the user to copy it and adopts a given pose, synchronized with the music.

- At this point, two scenarios are possible:

  - The player adopts a similar enough pose. Pepper then encourages them to keep going, and the song continues.

  - The player does not adopt a similar pose, or their body pose is not detected properly. In this case, Pepper asks the player to focus and try again, and the music goes back to the previous part.

- If no skeleton is found at all, Pepper tells the user that it cannot find them. Two further sub-scenarios are possible:

  - The user is detected again after a while. Pepper informs the player and the game continues.

  - No skeleton is found after a while, and the game is stopped.

- When all the gestures are done and the music ends, Pepper thanks the user and the game goes back to its first state. The user can then click on the tablet again to restart the game without entering their name again.
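The flow above can be summarized as a small event-driven state machine. The sketch below is plain Python, not the SMACH implementation in pepper_imitation_game_node.py; the names State, ImitationGame and the event strings ("tablet_click", "pose_ok", "lost_timeout", ...) are hypothetical. In the real system these events would come from the tablet, the pose checks and the skeleton tracker.

```python
from enum import Enum, auto
from typing import Optional


class State(Enum):
    WELCOME = auto()       # Pepper greets the users and waits for a tablet click
    ASK_NAME = auto()      # input dialog shown on the tablet
    SHOW_GESTURE = auto()  # Pepper adopts the current pose, synced with the music
    CHECK_POSE = auto()    # the player's skeleton is compared with the pose
    USER_LOST = auto()     # no skeleton found, waiting for the player to return
    STOPPED = auto()       # player not found again, game aborted


class ImitationGame:
    """Hypothetical model of the game flow (not the project's SMACH code)."""

    def __init__(self, num_gestures: int) -> None:
        self.num_gestures = num_gestures
        self.gesture = 0                 # index of the current gesture
        self.name: Optional[str] = None  # remembered across rounds
        self.state = State.WELCOME

    def on(self, event: str, name: Optional[str] = None) -> State:
        s = self.state
        if s is State.WELCOME and event == "tablet_click":
            # A known player restarts directly; a new one is asked for a name.
            self.gesture = 0
            self.state = State.SHOW_GESTURE if self.name else State.ASK_NAME
        elif s is State.ASK_NAME and event == "name_entered":
            self.name = name
            self.state = State.SHOW_GESTURE
        elif s is State.SHOW_GESTURE and event == "gesture_done":
            self.state = State.CHECK_POSE
        elif s is State.CHECK_POSE and event == "pose_ok":
            self.gesture += 1
            # After the last gesture Pepper thanks the player and returns to the start.
            done = self.gesture == self.num_gestures
            self.state = State.WELCOME if done else State.SHOW_GESTURE
        elif s is State.CHECK_POSE and event == "pose_bad":
            # Same gesture again, after the music is sent back to the previous part.
            self.state = State.SHOW_GESTURE
        elif s is State.CHECK_POSE and event == "no_skeleton":
            self.state = State.USER_LOST
        elif s is State.USER_LOST and event == "user_found":
            self.state = State.SHOW_GESTURE
        elif s is State.USER_LOST and event == "lost_timeout":
            self.state = State.STOPPED
        # Any other (state, event) pair leaves the state unchanged.
        return self.state
```

In SMACH, each branch here would roughly correspond to a state outcome mapped to the next state through the `transitions` of the state machine.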

Known Issues and Limitations

- Synchronization is hardcoded, so it is only correct when the same audio file is used (it is available in the robot's internal memory under /home/pepper/resources/audio). The state machine waits until the file has been played up to a given time (usually the time at which a new gesture starts).

- The way openni_tracker publishes skeleton information (as TF frames named <body_part>_<id>, for example torso_1) causes problems when people are lost and new ids are assigned, because old TF frames are still listed by the ROS API for a while afterwards. A simple workaround would be to query the TF frames and keep only those with the highest id.

- Currently, only one person is tracked. However, extending the pose verification to multiple people should be fairly straightforward.
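The stale-frame workaround described above, keeping only the frames with the highest id, can be sketched as a pure function over a list of frame names (for example, as obtained by querying TF). The helper names are hypothetical, the regular expression assumes the <body_part>_<id> convention with lowercase part names, and, like the workaround itself, it assumes the most recently detected person has the highest id. Grouping frames by id, as group_by_user does, could also serve as a starting point for tracking several people.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, Optional, Set, Tuple

# "<body_part>_<id>", e.g. "torso_1" or "left_hand_12"; frames not following
# this pattern (such as "openni_depth_frame") are ignored.
FRAME_RE = re.compile(r"^([a-z_]+)_(\d+)$")


def group_by_user(frames: Iterable[str]) -> Dict[int, Set[str]]:
    """Map each user id to the set of body parts published for it."""
    users: Dict[int, Set[str]] = defaultdict(set)
    for frame in frames:
        match = FRAME_RE.match(frame.lstrip("/"))  # tolerate a leading "/"
        if match:
            users[int(match.group(2))].add(match.group(1))
    return dict(users)


def latest_user_frames(frames: Iterable[str]) -> Tuple[Optional[int], Set[str]]:
    """Keep only the frames of the highest id, assumed to be the current user."""
    users = group_by_user(frames)
    if not users:
        return None, set()
    uid = max(users)
    return uid, {f"{part}_{uid}" for part in users[uid]}
```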

Future Work

- The detection provided by the Kinect and the OpenNI libraries is not sufficient: the calibration phase is a limiting factor when interacting with the subjects. One possible solution would be to switch to body pose detection based on RGB data alone, for example OpenPose. This particular game could be reproduced entirely from joint angles in 2D detections, which would even allow us to drop the external Kinect and use Pepper's built-in cameras instead. More complex scenarios and gestures may require fusing visual and depth data. For real-time operation, a GPU should be used.
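As an illustration of checking a posture from 2D joint angles alone, the sketch below computes the angle at a joint from three image-plane keypoints and compares a set of such angles with a reference pose. The keypoint names, the ANGLES table and the 25° tolerance are assumptions made for illustration; they would need to be mapped onto the detector's actual output (OpenPose, for instance, uses its own keypoint layout) and tuned on real data.

```python
import math
from typing import Dict, Mapping, Tuple

Point = Tuple[float, float]

# Hypothetical (first, vertex, last) keypoint triplets for each measured angle.
ANGLES: Dict[str, Tuple[str, str, str]] = {
    "left_elbow": ("left_shoulder", "left_elbow", "left_wrist"),
    "right_elbow": ("right_shoulder", "right_elbow", "right_wrist"),
    "left_shoulder": ("left_hip", "left_shoulder", "left_elbow"),
    "right_shoulder": ("right_hip", "right_shoulder", "right_elbow"),
}


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle at vertex b, in degrees (0 to 180), between segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        raise ValueError("degenerate segment: two keypoints coincide")
    cos = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))  # clamp rounding errors


def is_similar(keypoints: Mapping[str, Point], reference: Mapping[str, float],
               tolerance_deg: float = 25.0) -> bool:
    """True if every reference angle is matched within tolerance_deg."""
    try:
        angles = {name: joint_angle(*(keypoints[k] for k in ANGLES[name]))
                  for name in reference}
    except (KeyError, ValueError):
        return False  # missing or degenerate joints: treat as "pose not detected"
    return all(abs(angles[n] - reference[n]) <= tolerance_deg for n in reference)
```

Interior angles alone cannot tell, for example, an arm raised to the front from one raised to the side; more demanding gestures would need extra features or depth data, as noted above.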

- The game concept could be generalized using simple configuration files. These files could define the audio file to be used and the time at which each posture should be adopted. The postures themselves could be defined there as well.

- Furthermore, these files could be generated with a user-friendly GUI in which the user selects an audio file and sets up the sync times and the robot poses.
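A minimal sketch of such a configuration file, assuming a JSON format. The schema (audio_file, cues, start, posture), the file name example.wav, the posture names and the class names are all hypothetical. cue_at mirrors the state machine waiting for the playback time to reach a gesture, and rewind_time assumes that "going back to the previous part" means seeking to the start of the cue before the failed one.

```python
import bisect
import json
from dataclasses import dataclass
from typing import Tuple

# Hypothetical example file: cue times in seconds from the start of the song.
EXAMPLE = """{
  "audio_file": "example.wav",
  "cues": [
    {"start": 12.5, "posture": "arms_up"},
    {"start": 4.0, "posture": "hands_on_head"}
  ]
}"""


@dataclass(frozen=True)
class Cue:
    start: float   # seconds into the audio file at which the pose is adopted
    posture: str   # hypothetical posture name, resolved to joint targets elsewhere


@dataclass(frozen=True)
class GameConfig:
    audio_file: str
    cues: Tuple[Cue, ...]

    @classmethod
    def from_json(cls, text: str) -> "GameConfig":
        data = json.loads(text)
        # Sort by start time so the cues can be searched with bisect.
        cues = sorted((Cue(float(c["start"]), str(c["posture"])) for c in data["cues"]),
                      key=lambda cue: cue.start)
        return cls(str(data["audio_file"]), tuple(cues))

    def cue_at(self, playback_time: float) -> int:
        """Index of the last cue whose start has been reached, or -1 before the first."""
        starts = [cue.start for cue in self.cues]
        return bisect.bisect_right(starts, playback_time) - 1

    def rewind_time(self, failed_index: int) -> float:
        """Where to seek when cue failed_index must be repeated (previous cue's start)."""
        return self.cues[failed_index - 1].start if failed_index > 0 else 0.0
```

Keeping the cue times in data rather than in the state machine would let the same game logic drive any song, which addresses the hardcoded synchronization listed under Known Issues and Limitations.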

Contact

Álvaro Páez Guerra

paezguerraalvaro@gmail.com