
Poppy-Kine: S5 project 2015-2016

Overview

The main objective of this project is to help patients with functional rehabilitation exercises.


Patient A's physiotherapist (kinesitherapist) shows him or her some movements that A will have to repeat more or less regularly, at home and without the therapist.

To help the patient memorize them, the movements are performed by a Poppy robot (see the Poppy project and the Poppy robot wiki page).

First, the movements are performed in front of a Kinect camera (v2).

The movements, captured as positions of the Skeleton joints, are saved and then replayed by the robot.

The patient then has to reproduce the movement in front of the Kinect. Depending on the quality of this repetition, the movement may have to be repeated again.

How it works

- (Zeroth step: install the Kinect camera and pyKinect (Python library) on the computer. This is already done on the lab's computer.)

- First step: capture the Skeleton (Kinect v2) as Cartesian coordinates (x, y, z)

- Second step: convert the Skeleton data into the Poppy robot's (angular) referential

NB:

- To make Poppy rigid, run Init_poppy.py: we wrote this program to put the robot in its zero position (i.e. all the motors' angles are set to zero).

- Don't forget to plug Poppy in!
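Init_poppy.py itself is not reproduced here. As a rough sketch of what such a script can look like, assuming the pypot API used by the Poppy software (a robot whose `motors` each expose `compliant` and `goal_position`), the zero position could be set like this. The function name `set_zero_position` is hypothetical.

```python
def set_zero_position(robot):
    """Make every motor stiff and send it to 0 degrees.

    Assumption: `robot` behaves like a pypot robot, i.e. `robot.motors`
    is an iterable of motors with `compliant` and `goal_position` attributes.
    """
    for motor in robot.motors:
        motor.compliant = False  # non-compliant (stiff) motors hold their position
        motor.goal_position = 0  # target angle in degrees
    return robot


# Possible use on the real robot (requires the poppy-humanoid package):
#   from poppy_humanoid import PoppyHumanoid
#   set_zero_position(PoppyHumanoid())
```

Setting `goal_position` directly moves each motor at its current speed; pypot robots also provide a `goto_position` method that reaches a target pose over a given duration, which may be gentler on the robot.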

First step: getting the Skeleton

- Plug the Kinect camera into the computer before turning the computer on (otherwise you will probably need to restart it)

- Connect the Kinect camera

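The Kinect v2 tracks the position of the following 25 joints:

- Spine and head: SpineBase, SpineMid, SpineShoulder, Neck, Head

- Left arm: ShoulderLeft, ElbowLeft, WristLeft, HandLeft, HandTipLeft, ThumbLeft

- Right arm: ShoulderRight, ElbowRight, WristRight, HandRight, HandTipRight, ThumbRight

- Left leg: HipLeft, KneeLeft, AnkleLeft, FootLeft

- Right leg: HipRight, KneeRight, AnkleRight, FootRight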

Second step: the movement

All the programs we wrote are in the C:\Users\ihsev\Documents\2015projetS5PoppyKine-VirginieNgoGuillaumeVillette\poppy-humanoid\software\poppy_humanoid\ folder.

First option: manually save, convert, then play the movement

- Save the movement

- Convert the angles

- Make Poppy play the movement

Second option: Save then play the movement

How we proceeded

All the programs we wrote are stored in the 2015projetS5PoppyKine-VirginieNgoGuillaumeVillette/poppy_humanoid/software/poppy_humanoid/ folder.

First step: getting the Skeleton

We used the pykinect2 Python library.

The code is in the poppy_kinect_save_mouvement.py file.

For each movement, a <name_of_the_exercise_x>.txt file is saved in the /exercices/<name_of_the_exercise>/ folder (x is the movement index, starting from 0 for the first movement).

Each .txt file contains a Python dictionary stored in JSON format, so that it can be loaded back as a dictionary (rather than plain text) and processed for the conversion.
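A minimal sketch of this save/load round trip with Python's standard `json` module is shown below. The function names and the `root` parameter are hypothetical, the file name is assumed to be `<exercise>_<x>.txt`, and the folder is taken relative to the working directory; the project's own code may differ.

```python
import json
import os


def save_movement(movement, exercise, x, root="exercices"):
    """Write the movement dictionary as JSON to <root>/<exercise>/<exercise>_<x>.txt."""
    folder = os.path.join(root, exercise)
    os.makedirs(folder, exist_ok=True)  # create the exercise folder if needed
    path = os.path.join(folder, "{}_{}.txt".format(exercise, x))
    with open(path, "w") as f:
        json.dump(movement, f)
    return path


def load_movement(path):
    """Read a movement file back into a Python dictionary."""
    with open(path) as f:
        return json.load(f)
```

Because JSON object keys are always strings, time keys written as numbers come back as strings after loading.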

The Cartesian coordinates are stored in the “positions” sub-dictionary, where each time step (which depends on the frame rate) has its own dictionary.

The quaternions are stored in the “orientations” sub-dictionary, organized the same way.

In short, the structure of a .txt file (i.e. the dictionary that stores the movement) is:

{

"framerate": "<framerate>",

"orientations": { "<time1>": { "<joint1>": [x,y,z,w], "<joint2>": [x,y,z,w] }, "<time2>": { "<joint1>": [x,y,z,w], "<joint2>": [x,y,z,w] } },

"positions": { "<time1>": { "<joint1>": [x,y,z], "<joint2>": [x,y,z] }, "<time2>": { "<joint1>": [x,y,z], "<joint2>": [x,y,z] } }

}

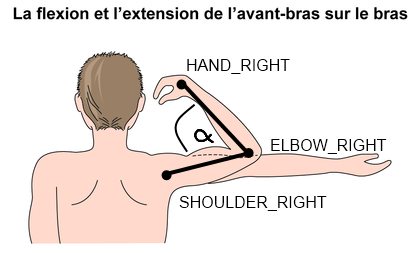

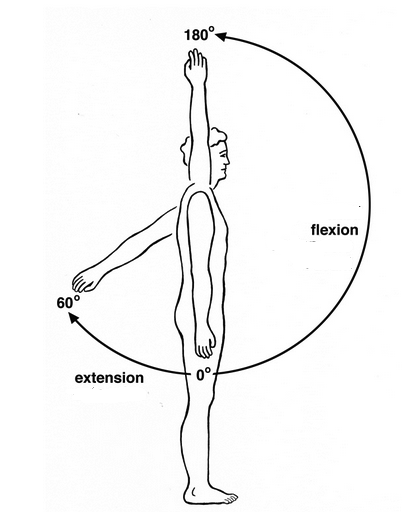
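The capture code in poppy_kinect_save_mouvement.py is not reproduced here. The sketch below only illustrates how one Kinect frame could become one entry of “positions” and one of “orientations”. It assumes pykinect2 body objects, where `body.joints[i].Position` has `x, y, z` and `body.joint_orientations[i].Orientation` has `x, y, z, w`; `add_frame` and `JOINT_NAMES` are hypothetical, and only two joints are listed for brevity.

```python
# Hypothetical subset of joint names, keyed by the Kinect v2 joint index
# (JointType_SpineBase = 0, JointType_Head = 3).
JOINT_NAMES = {0: "SpineBase", 3: "Head"}


def add_frame(movement, time_key, body, joint_names=JOINT_NAMES):
    """Store one frame of `body` under `time_key` in the movement dictionary."""
    positions = {}
    orientations = {}
    for index, name in joint_names.items():
        p = body.joints[index].Position                  # Cartesian point
        q = body.joint_orientations[index].Orientation   # quaternion
        positions[name] = [p.x, p.y, p.z]
        orientations[name] = [q.x, q.y, q.z, q.w]
    # JSON keys are strings, so the time key is stored as a string.
    movement.setdefault("positions", {})[str(time_key)] = positions
    movement.setdefault("orientations", {})[str(time_key)] = orientations
    return movement
```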

Second step: convert the movement

The Kinect camera captures the Cartesian coordinates of the person performing the movement. However, to make Poppy play a movement, you need to “send” it an angle for each of its motors.

“Poppy, move your r_shoulder_x motor by 30 degrees (please)!”

So the Cartesian coordinates have to be converted into angles. But how?!

Our approximations are in the Kinect_to_Poppy_angles.py file.
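The approximations in Kinect_to_Poppy_angles.py are not reproduced here. A common starting point, shown only as an illustration, is the angle at a joint between the two limb segments that meet there. For example, the elbow angle follows from the shoulder (a), elbow (b) and wrist (c) positions: with u = a - b and v = c - b, θ = arccos(u·v / (|u| |v|)). The function name `joint_angle` is hypothetical.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at point b between segments b->a and b->c (3D points)."""
    u = [a[i] - b[i] for i in range(3)]
    v = [c[i] - b[i] for i in range(3)]
    dot = sum(ui * vi for ui, vi in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("two of the points coincide, the angle is undefined")
    cos_theta = max(-1.0, min(1.0, dot / norm))  # clamp against rounding errors
    return math.degrees(math.acos(cos_theta))
```

This raw angle is 180 degrees for a straight limb; it would still have to be offset and signed to match each Poppy motor's own zero position and direction, which this sketch does not handle.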

For each movement, the converted angles are saved in the same /exercices/<name_of_the_exercise>/ folder, in a <name_of_the_exercise_x>_poppy.txt file (x is the movement index, starting from 0 for the first movement).

This file is also a Python dictionary stored in JSON format, so that it can be loaded back as a dictionary and used by Poppy to play the movement.

In short, its structure is:

{

"framerate": "<framerate>",

"positions": { "<time1>": { "<motor1>": [angle,0], "<motor2>": [angle,0] }, "<time2>": { "<motor1>": [angle,0], "<motor2>": [angle,0] } }

}
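To replay such a file, one possible sketch is to walk through the time steps in order and send each motor its angle, waiting 1/framerate seconds between frames. It assumes the time keys are numeric strings and that only the first value of each [angle, 0] pair is needed; `play_movement` and `set_goal` are hypothetical names.

```python
import time


def play_movement(movement, set_goal, sleep=time.sleep):
    """Send each frame of a *_poppy.txt movement to the robot, in time order.

    `set_goal(motor_name, angle)` is supplied by the caller.
    Assumption: time keys are numeric strings such as "0", "1", ...
    """
    period = 1.0 / float(movement["framerate"])  # seconds between frames
    frames = movement["positions"]
    for time_key in sorted(frames, key=float):
        for motor_name, values in frames[time_key].items():
            set_goal(motor_name, values[0])  # values is [angle, 0]; only the angle is used
        sleep(period)


# Possible use with pypot (requires the robot and the poppy-humanoid package):
#   from poppy_humanoid import PoppyHumanoid
#   poppy = PoppyHumanoid()
#   play_movement(movement, lambda name, angle: setattr(getattr(poppy, name), "goal_position", angle))
```

Passing `set_goal` and `sleep` as parameters keeps the loop testable without a robot connected.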

For further improvements

The angles we calculated are unfortunately not accurate enough for complex movements. Using the quaternions (the “orientations” data) could be another way to improve the conversion.
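As an illustration of what the orientation data offers (not something the project implemented), the rotation angle between two unit quaternions q1 and q2, stored as [x, y, z, w], is θ = 2·arccos(|q1·q2|). Comparing a joint's orientation at two time steps this way measures how much it rotated. The function name `quaternion_angle` is hypothetical.

```python
import math


def quaternion_angle(q1, q2):
    """Rotation angle in degrees between two unit quaternions [x, y, z, w]."""
    # abs(): q and -q represent the same rotation.
    dot = abs(sum(a * b for a, b in zip(q1, q2)))
    dot = min(1.0, dot)  # clamp against rounding errors
    return math.degrees(2.0 * math.acos(dot))
```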

Moreover, GMM (Gaussian mixture models) and GMR (Gaussian mixture regression) could be used to synthesize samples of the same movement, to be reproduced first by Poppy and then by the patient.